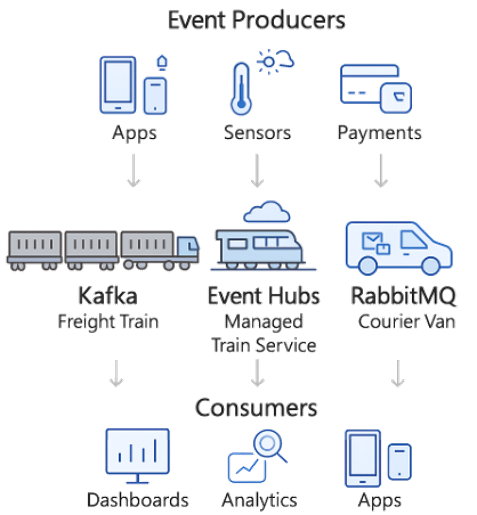

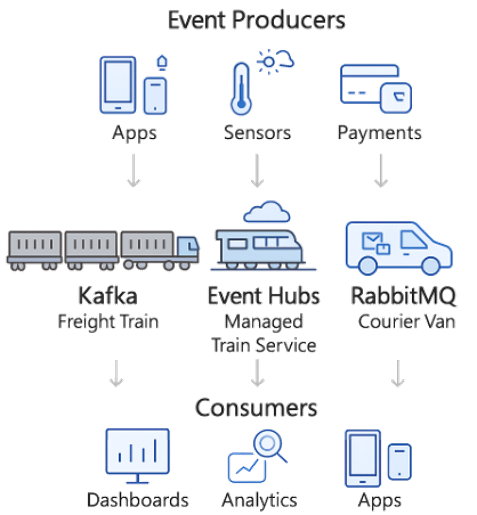

This diagram shows how three messaging technologies (Kafka, Event Hubs, and RabbitMQ) fit into a real-time pipeline.

At the top, we have our event producers: applications generating user activity, IoT sensors sending telemetry, and financial systems pushing transactions. These are the raw sources of events.

Now, those events need to get transported reliably to downstream consumers. That’s where our three options come in:

- Kafka, on the left, is like a freight train. It’s open source, distributed, and designed to move massive volumes of events continuously. It’s ideal for streaming pipelines at very large scale.

- Event Hubs, in the middle, is Azure’s managed event-streaming service. It is not Kafka under the hood, but it exposes a Kafka-compatible endpoint, so most Kafka clients can connect to it with configuration changes alone. You get similar freight train power without worrying about the tracks or the engines, because Azure handles that for you. It integrates directly with Fabric, which makes it especially attractive if you’re already in the Microsoft ecosystem.

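- RabbitMQ, on the right, is like a courier van. It’s not built for the same sustained volume as Kafka, but it’s reliable and flexible for routing messages to the right queues. With durable queues, persistent messages, and consumer acknowledgments, a message waits in its queue until a consumer confirms it, even if the consumer is offline for a bit. That gives at-least-once delivery rather than strict exactly-once, so consumers should tolerate an occasional duplicate. If you need per-message routing and confirmed delivery, RabbitMQ is a strong option.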
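To make the Event Hubs option concrete: Event Hubs exposes a Kafka-compatible endpoint, so an existing Kafka client can usually be pointed at it through configuration. The sketch below builds such a configuration in Python using librdkafka-style keys, as accepted by clients such as `confluent-kafka`. The helper name `event_hubs_kafka_config`, the namespace `my-namespace`, and the connection string are hypothetical placeholders, not values from this pipeline.

```python
def event_hubs_kafka_config(namespace: str, connection_string: str) -> dict:
    """Build Kafka client settings that target an Event Hubs namespace.

    Both arguments are placeholders supplied by the caller (assumed values).
    """
    return {
        # Event Hubs serves the Kafka protocol on port 9093 of the namespace host.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        # Traffic is TLS-encrypted and authenticated with SASL PLAIN.
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The username is the literal string "$ConnectionString";
        # the password is the Event Hubs connection string itself.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


config = event_hubs_kafka_config("my-namespace", "<event-hubs-connection-string>")
print(config["bootstrap.servers"])  # my-namespace.servicebus.windows.net:9093
```

The resulting dictionary would be passed to a Kafka producer or consumer constructor. The application code that publishes and reads events stays the same.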

Finally, at the bottom, we have the consumers: dashboards, analytics systems, or applications that depend on this event data. The key is that each broker delivers data in a way that matches its design philosophy: high throughput (Kafka), managed scale (Event Hubs), or reliable routing and acknowledged delivery (RabbitMQ).
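The difference in delivery philosophy can be illustrated with a small in-memory sketch. It is a toy model, not a client for any of these brokers: `EventLog` mimics the log-style model used by Kafka and Event Hubs, where events are retained and each consumer group tracks its own offset, while `AckQueue` mimics the queue-style model used by RabbitMQ, where a message leaves the queue only once it is acknowledged. All class and method names here are hypothetical.

```python
from collections import deque


class EventLog:
    """Log-style broker: events are retained; each group tracks its own offset."""

    def __init__(self):
        self._events = []
        self._offsets = {}  # consumer group -> next offset to read

    def append(self, event):
        self._events.append(event)

    def poll(self, group):
        offset = self._offsets.get(group, 0)
        batch = self._events[offset:]
        self._offsets[group] = len(self._events)  # commit the new offset
        return batch


class AckQueue:
    """Queue-style broker: a message is dropped only after it is acknowledged."""

    def __init__(self):
        self._ready = deque()
        self._unacked = {}  # delivery tag -> message awaiting acknowledgment
        self._next_tag = 0

    def publish(self, message):
        self._ready.append(message)

    def get(self):
        if not self._ready:
            return None
        self._next_tag += 1
        message = self._ready.popleft()
        self._unacked[self._next_tag] = message
        return self._next_tag, message

    def ack(self, tag):
        self._unacked.pop(tag)

    def requeue_unacked(self):
        # Simulates a consumer disconnecting before it acknowledged its messages.
        for tag in sorted(self._unacked, reverse=True):
            self._ready.appendleft(self._unacked.pop(tag))


log = EventLog()
for event in ["click", "scroll", "purchase"]:
    log.append(event)
dashboard_batch = log.poll("dashboard")
analytics_batch = log.poll("analytics")  # an independent group reads the same events

queue = AckQueue()
queue.publish("order-1")
tag, first_delivery = queue.get()
queue.requeue_unacked()                  # consumer went away without acknowledging
tag, second_delivery = queue.get()       # the same message is delivered again
queue.ack(tag)
queue_empty = queue.get() is None
```

In the log model, both consumer groups receive all three events because reading does not remove them. In the queue model, the unacknowledged message is redelivered, which is why this style of broker is usually described as at-least-once and why consumers are often written to be idempotent.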

2026-02-14