SQL: Plan Cache Pollution - Avoiding it and Fixing it

While SQL Server’s plan cache is generally self-maintaining, poor application coding practices can cause it to fill up with query plans that have been used only once and are unlikely ever to be reused. We call this plan cache pollution.
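Before fixing anything, it helps to measure how much of the cache is taken up by single-use plans. The queries below are a minimal sketch. They assume a login with VIEW SERVER STATE permission; newer versions may instead require VIEW SERVER PERFORMANCE STATE. They use the `usecounts`, `objtype`, `cacheobjtype` and `size_in_bytes` columns of `sys.dm_exec_cached_plans`. The `TOP (50)` limit and the rounding to megabytes are arbitrary choices made for illustration.

```sql
-- Query 1: how many compiled plans have been used exactly once, and how much
-- memory they occupy, broken down by object type (Adhoc, Prepared, Proc, ...).
SELECT cp.objtype,
       COUNT(*) AS single_use_plans,
       CAST(SUM(CAST(cp.size_in_bytes AS bigint)) / 1048576.0
            AS decimal(18, 2)) AS size_mb
FROM sys.dm_exec_cached_plans AS cp
WHERE cp.usecounts = 1
  AND cp.cacheobjtype = N'Compiled Plan'
GROUP BY cp.objtype
ORDER BY size_mb DESC;

-- Query 2: sample the text of single-use ad hoc plans. Sorting by text tends to
-- place near-duplicate variants of the same query next to each other.
SELECT TOP (50)
       cp.size_in_bytes,
       st.text
FROM sys.dm_exec_cached_plans AS cp
CROSS APPLY sys.dm_exec_sql_text(cp.plan_handle) AS st
WHERE cp.usecounts = 1
  AND cp.objtype = N'Adhoc'
ORDER BY st.text;
```

A large share of single-use Adhoc plans, combined with sampled query text that differs only in literal values or formatting, is the typical signature of plan cache pollution.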

Causes

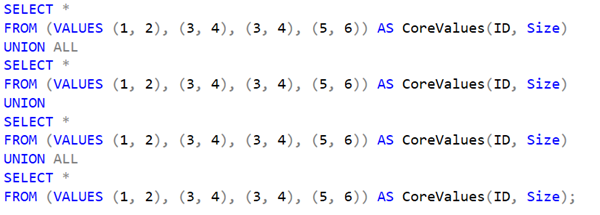

The most common cause of this issue is programming libraries that send many variations of what is essentially a single query. For example, imagine I have a query like:

2026-03-05