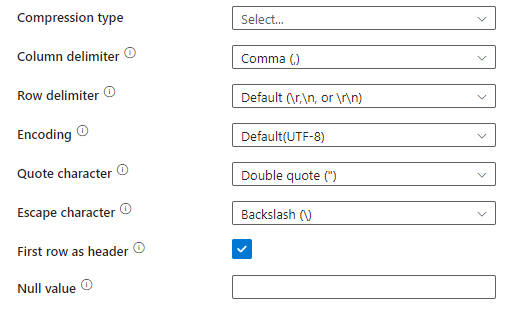

SSIS: Reading pipe delimited text and selecting particular output columns

There was a question on the Q&A forums today asking how to read data with SSIS when it's in this format:

|Col1| |Col2|Col3|Col|

|101| |A|21|DC|

One of the concerns was that there was a leading pipe. This is not a problem. If you set | as the column delimiter, each row contains 6 delimiters, so 7 columns are output. For the data row above, these are the values:

- Column 1: blank string
- Column 2: 101
- Column 3: blank string
- Column 4: A
- Column 5: 21
- Column 6: DC
- Column 7: blank string
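The column count is easy to check outside SSIS. The following is a minimal Python sketch, not SSIS itself. It only mimics the splitting by treating every | as a delimiter, and the names `row`, `fields`, `wanted_positions` and `selected` are made up for illustration. It then keeps just the positions that carry data, which is the same idea as selecting only particular output columns.

```python
# Sample data row from the question.
row = "|101| |A|21|DC|"

# Splitting on the 6 pipe delimiters yields 7 fields,
# including the empty ones before the leading pipe and after the trailing pipe.
fields = row.split("|")

# Keep only the fields that carry data (1-based positions 2, 4, 5 and 6).
wanted_positions = [2, 4, 5, 6]
selected = [fields[p - 1] for p in wanted_positions]

print(len(fields))
print(selected)
```

In this sketch the third field is a single space rather than a truly empty string, because the sample row has a space between the two pipes. Whether that matters depends on how the column is handled afterwards.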

2024-07-19