Azure Data Factory (ADF) - Issues with parsing strings in CSVs

It’s scary how much of the world’s data lives in CSVs. Yet, as a standard, it’s problematic. So many implementations just don’t work as expected. Today, I had a client asking about why Azure Data Factory wouldn’t read a CSV that, while odd, was in a valid format.

The simplest equivalent of the file that wouldn’t load, would be one like this:

First Column,Second Column,Third Column,Fourth Column

12,Terry Johnson,Paul Johnson,031-23423

13,Mary Johnson,"Paul,Johnson",031-23423

14,Mia Johnson,"Paul ""the beast"", Johnson",031-23423

16,Cherry Johnson,Paul Johnson,031-23423

There are meant to be four columns. The source system wrapped strings in quotes only when the string contained a comma, as that was the delimiter for the file.

But the fourth line in that file would not load. ADF would split it across multiple columns. It was not interpreting the double-double-quotes.

I was puzzled at first, as the file seemed ok. If I upload that file an any of the common online CSV file validators or linters, they all said it was ok.

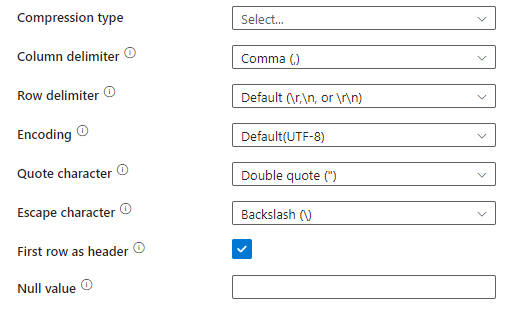

The client had configured the dataset as follows:

The options seemed ok to them.

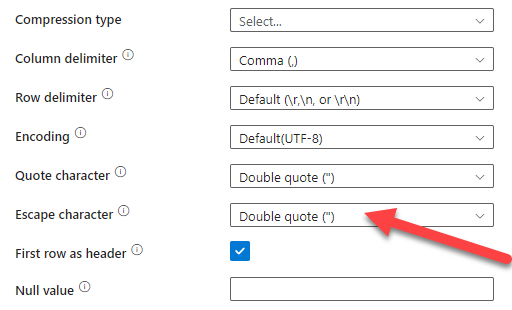

Turns out the issue was with the escape character. ADF defaulted to using a backslash. For the majority of CSV files that I see, it would need to be a double-quote:

And sure enough, with that change in place, it loaded files similar to the one above without issue.

I don’t know why that’s not the default for CSV files in ADF but I do hope it helps someone else.

2023-09-12