Fabric RTI 101: Message Queue Comparisons

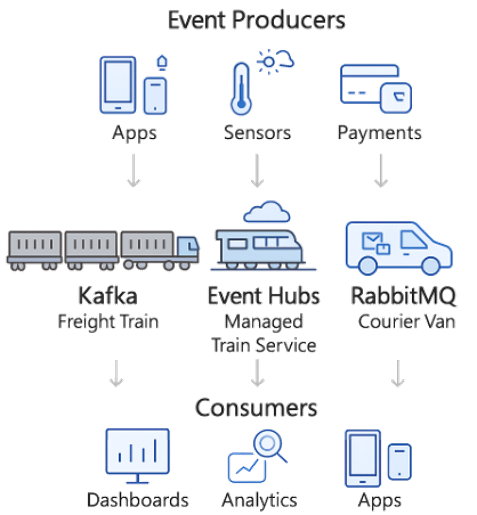

The diagram above shows how three messaging technologies (Kafka, Event Hubs, and RabbitMQ) fit into a real-time pipeline.

At the top, we have our event producers: applications generating user activity, IoT sensors sending telemetry, and financial systems pushing transactions. These are the raw sources of events.

Now, those events need to get transported reliably to downstream consumers. That’s where our three options come in:

- Kafka, on the left, is like a freight train. It’s open source, distributed, and designed to move massive volumes of events continuously. It’s ideal for streaming pipelines at very large scale.

- Event Hubs, in the middle, is Azure’s managed event streaming service. It is not Kafka itself, but it exposes a Kafka-compatible endpoint, so existing Kafka clients can usually connect with a configuration change. You get freight train power without worrying about the tracks or the engines; Azure handles that for you. It integrates directly with Fabric, which makes it especially attractive if you’re already in the Microsoft ecosystem.

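- RabbitMQ, on the right, is like a courier van. It’s not designed to move massive volumes, but it’s reliable and flexible for routing and acknowledged delivery. With durable queues, persistent messages, and consumer acknowledgements, a message waits in the queue while the consumer is offline and is redelivered if it is never acknowledged. That gives at-least-once delivery rather than exactly-once, so consumers should tolerate an occasional duplicate. If you need per-message routing and delivery tracking, RabbitMQ is a strong option.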
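
The acknowledge-and-redeliver behaviour behind the courier van can be sketched without a real broker. The example below is a toy in-memory model, not the RabbitMQ client API: `ToyQueue`, `run_demo`, and the order IDs are all hypothetical names made up for illustration. It shows a consumer that crashes before acknowledging, the message being requeued, and an idempotent handler absorbing the duplicate.

```python
from collections import deque


class ToyQueue:
    """In-memory stand-in for a broker queue with manual acknowledgements."""

    def __init__(self):
        self._ready = deque()   # messages waiting to be delivered
        self._unacked = {}      # delivery tag -> message handed out but not yet acked
        self._next_tag = 1

    def publish(self, message):
        self._ready.append(message)

    def deliver(self):
        """Hand the next message to a consumer; return (tag, message) or None."""
        if not self._ready:
            return None
        message = self._ready.popleft()
        tag = self._next_tag
        self._next_tag += 1
        self._unacked[tag] = message
        return tag, message

    def ack(self, tag):
        """Consumer confirms processing, so the queue can forget the message."""
        del self._unacked[tag]

    def requeue_unacked(self):
        """Simulate a dropped consumer: unacked messages return to the front."""
        for tag in sorted(self._unacked, reverse=True):
            self._ready.appendleft(self._unacked[tag])
        self._unacked.clear()


def run_demo():
    queue = ToyQueue()
    for order_id in ("order-1", "order-2"):
        queue.publish(order_id)

    processed = []   # every time the handler ran, duplicates included
    seen = set()     # idempotency record keyed by message ID
    applied = []     # side effects, applied once per distinct message

    def handle(message):
        processed.append(message)
        if message not in seen:
            seen.add(message)
            applied.append(message)

    # First consumer handles order-1 but "crashes" before acknowledging it.
    _tag, message = queue.deliver()
    handle(message)
    queue.requeue_unacked()

    # Second consumer drains the queue and acknowledges each message.
    while (delivery := queue.deliver()) is not None:
        tag, message = delivery
        handle(message)
        queue.ack(tag)

    return processed, applied
```

Calling `run_demo()` returns two lists: the handler ran twice for the first order, but its effect was applied only once. That is the practical meaning of at-least-once delivery paired with an idempotent consumer.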

Finally, at the bottom, we have the consumers: dashboards, analytics systems, or applications that depend on this event data. The key is that each broker delivers data in a way that best matches its design philosophy: high throughput (Kafka), managed scale (Event Hubs), or acknowledged, routed delivery (RabbitMQ).

The diagram really emphasizes that while all three technologies move events from producers to consumers, they do so in different ways, optimized for different use cases.

Comparison Summary

| Feature | Kafka (Apache) | Event Hubs (Azure) | RabbitMQ |
|---|---|---|---|
| Type | Open-source event streaming | Managed Kafka-compatible service | Open-source message broker |
| Scale | Millions of events/sec, very high throughput | Millions of events/sec, cloud-native scaling | Lower throughput, not built for firehose streams |
| Focus | Streaming pipelines, analytics | Cloud-native streaming, Azure integration | Reliable messaging, task queues, routing |
| Management | You manage clusters, infra | Fully managed by Azure | Lightweight, self-managed |
| Reliability | Fault-tolerant, distributed | Fault-tolerant, auto-scaled by Azure | Strong delivery guarantees, ack/retry |
| Use Cases | Real-time analytics, log processing, IoT telemetry | Same as Kafka + Azure workloads integration | Enterprise messaging, workflows, transactional jobs |
| Analogy | Freight train (moves huge loads) | Managed freight train service | Courier service (guaranteed delivery, routing flexibility) |

This table helps us compare Kafka, Event Hubs, and RabbitMQ side by side. Kafka is the open-source workhorse: massive throughput, distributed, and highly scalable, but you have to manage the infrastructure yourself. Event Hubs is Microsoft’s managed counterpart: Kafka-compatible, with Azure taking care of scaling, upgrades, and integration into Fabric. RabbitMQ is a different beast: it’s more about reliable messaging and complex routing patterns than raw throughput. Think of it as the courier service. It tracks each message with acknowledgements and redelivers anything that goes unacknowledged (at-least-once delivery, so a duplicate is possible), but it won’t move the same volume as a Kafka stream.
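
To make "Kafka-compatible" concrete, here is a minimal sketch of the client settings a Kafka application would typically use to reach an Event Hubs namespace through its Kafka endpoint. The function name `event_hubs_kafka_config` and the namespace and connection string values are placeholders invented for this example. The host suffix assumes the public Azure cloud, and the keys follow the librdkafka naming used by clients such as `confluent-kafka`.

```python
def event_hubs_kafka_config(namespace, connection_string):
    """Build Kafka client settings for an Event Hubs namespace (public Azure cloud assumed)."""
    return {
        # The Event Hubs Kafka endpoint listens on port 9093.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        # TLS plus SASL PLAIN authentication.
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The username is the literal string "$ConnectionString";
        # the password is the namespace connection string itself.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


# Placeholder values; substitute your own namespace and connection string.
config = event_hubs_kafka_config("my-namespace", "<your-connection-string>")
```

The resulting dictionary could then be handed to a Kafka client, for example `confluent_kafka.Producer(config)`, assuming that package is installed. The rest of the producer code stays the same as it would against a self-managed Kafka cluster.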

So the choice depends on your workload: streaming analytics → Kafka or Event Hubs; transactional messaging → RabbitMQ.

Learn more about Fabric RTI

If you want to go deeper on RTI, we have an online, on-demand course that you can enrol in right now: Mastering Microsoft Fabric Real-Time Intelligence.

2026-02-14