ADF: Passing a file attachment via a POST to a REST service using Azure Data Factory

I was recently doing work for a client where I needed to replace some Python code that was being used to upload a file attachment to a REST based service using a POST command. I was trying to do it using a Web Activity in Azure Data Factory. What was surprising was how little information I could find on how to do that.

While I can’t post all the code, I did want to post the core parts here in case it helps others, and for my own future reference. The basic steps involve:

- Put the file into a storage account

- Read the file into a variable

- Construct the body of the POST call, including the attachment

- Send the POST call using a Web Activity

- Test the REST-based call

Put the file into a storage account

Ideally, the Web Activity would have a way to just point to a file in an Azure Storage Account and get it to attach that file to calls. It doesn’t have that option.

What it does have is an option to include a dataset in the body of the call.

I was immediately hopeful and tried that, and while it was interesting, it didn’t include the data as an attachment. It just appended it into the body of the call.

So let’s load up a file into a variable and use the variable to provide part of the body of the web call.

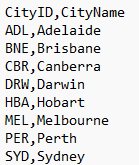

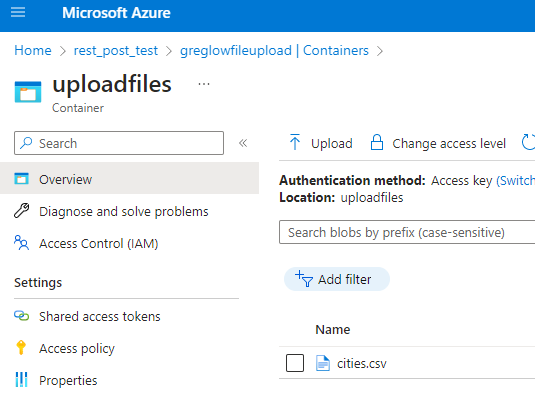

First, I’ve created a resource group called rest_post_test using the Azure portal, then created a storage account called greglowfileupload. I created a text file called cities.csv with the following contents:

And I uploaded it to a container called uploadfiles.

Read the file into a variable

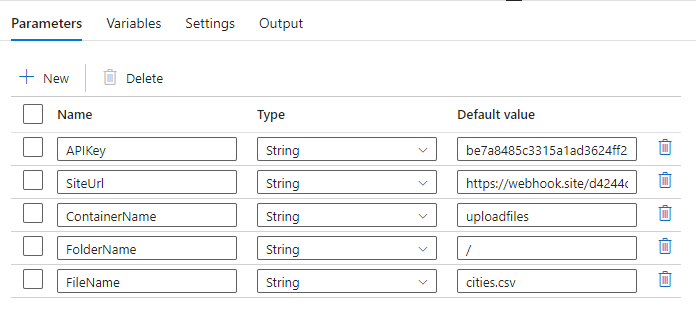

I created an Azure pipeline called RestUploadTest, and added the following parameters:

The APIKey was provided by the REST service and is used to log on, instead of using other forms of authentication.

The SiteURL is the address that the POST is being sent to. I’ve used a test site that I’ll talk about in a moment.

The ContainerName, FolderName, and FileName are the location of the file in the storage account. (It is not in a subfolder)

All of these values would normally be read from a database, but for simplicity, I’ve made them parameters to the pipeline.

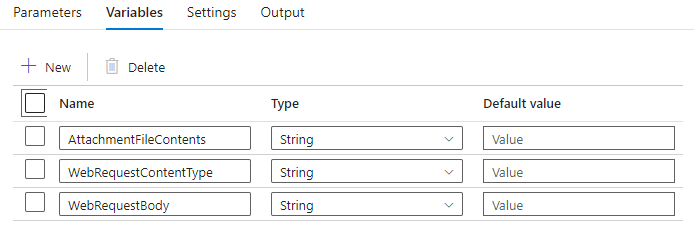

I then added the following variables:

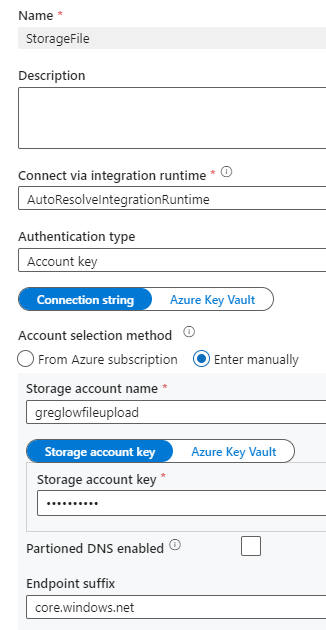

Next I added a linked service StorageFile to connect to the storage account.

Note that I would normally use a Key Vault for holding key details for the storage account, but I’ve gone for simplicity in this demo.

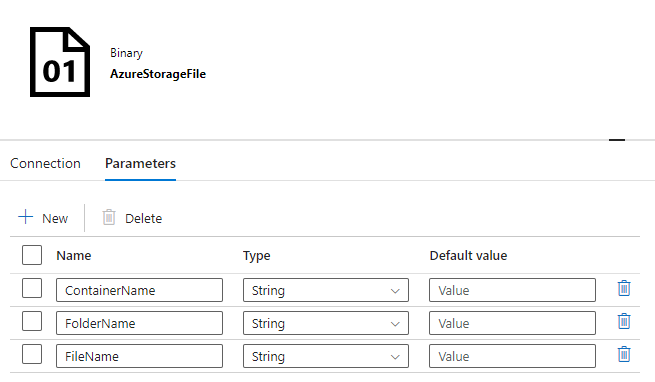

To read the file from storage, we can use a Lookup Activity, but it requires a Dataset, so we create the dataset first. I called it StorageTextFile. I like to make datasets reusable, so I added parameters to it:

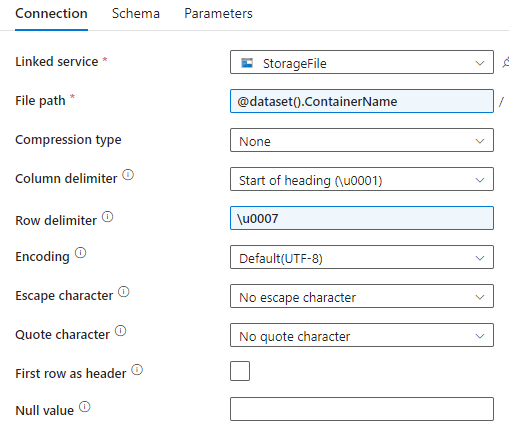

Then I configured those parameters as dynamic values in the Connection tab:

Note that because I used a Delimited Text File as the dataset type, I had to configure a column and row delimiter. I chose values that will not occur in my file. I used the preview option to make sure I could read the data.

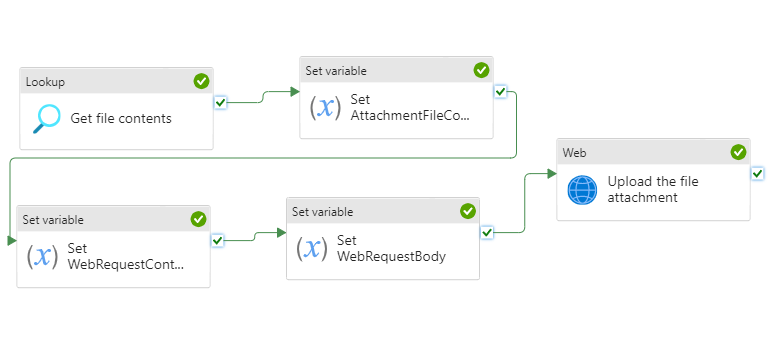

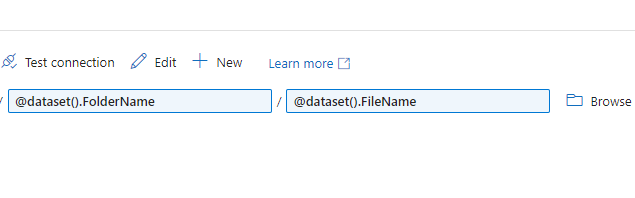

In the pipeline, I added a Lookup Activity called Get File Contents, configured the timeout as 20 minutes (not the default of 12 hours !) and set the details as follows:

The dataset properties were populated dynamically from the pipeline parameters.

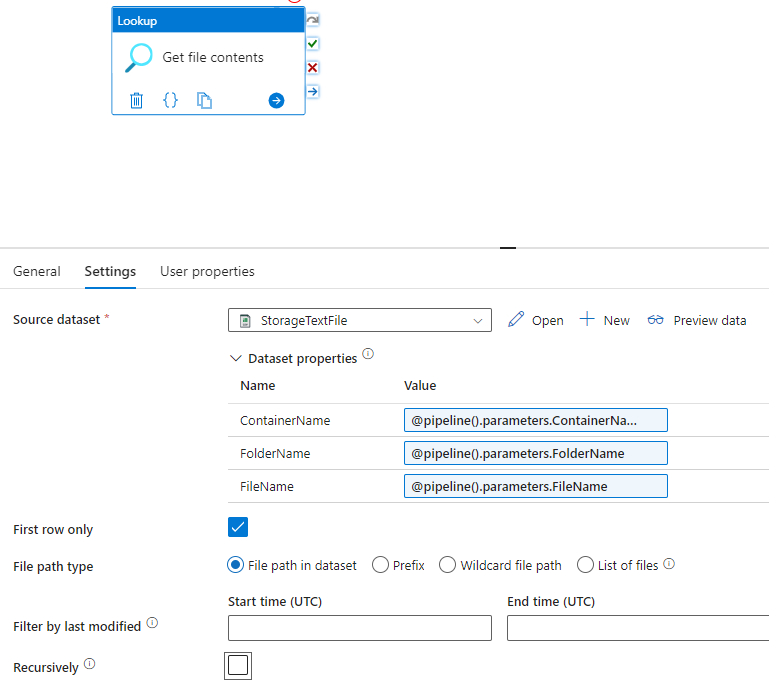

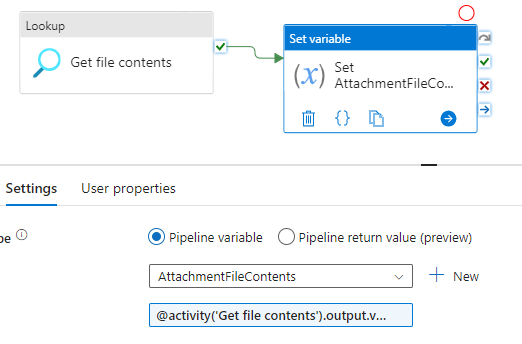

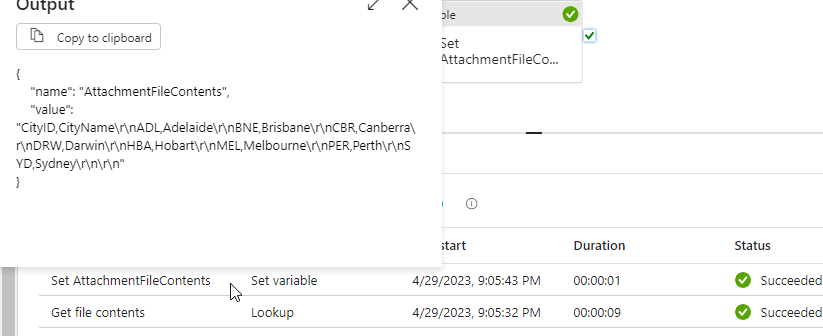

Finally, I added a Set Variable Activity called Set AttachmentFileContents.

The dynamic code for setting the variable was:

@activity('Get file contents').output.firstRow.Prop_0

I used the output of the Get file contents activity, and got the first row from it. Finally, because I didn’t use the first row as a header row, it allocated its own headers. In this case, I have one column called Prop_0.

And then tested the code up to this point. I clicked on the output of the Set AttachmentFileContents Activity and can see the values. (Encoded in JSON in this viewer).

All good to here. We have the file loaded into a variable.

Construct the body of the POST call, including the attachment

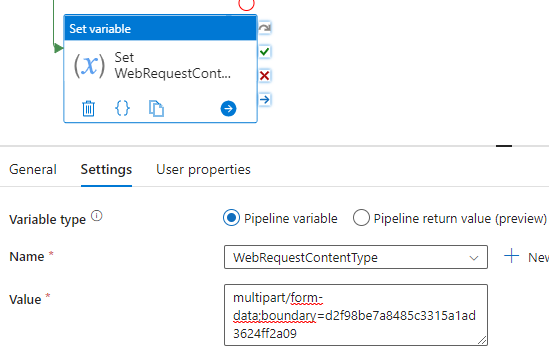

The next two things I need to configure are the variables that are holding the content type and body for the web request. I put them in variables separately to make it easier to debug.

First, I configured the web request content type variable:

The value was actually:

multipart/form-data;boundary=d2f98be7a8485c3315a1ad3624ff2a09

This is multipart form data, and because the body can contain many values, you need a boundary that’s used to separate them.

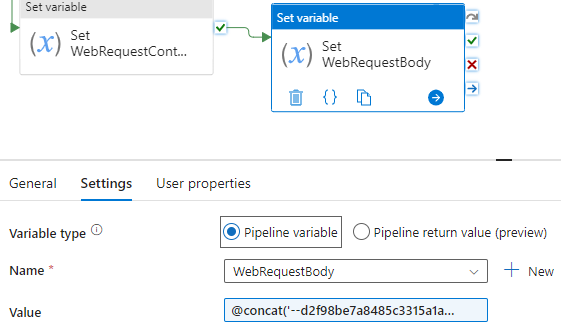

Then the body values:

The value was comprised of:

@concat('--d2f98be7a8485c3315a1ad3624ff2a09

Content-Disposition: form-data; name="file"; filename="',pipeline().parameters.FileName,'"

Content-Type: text/csv

',variables('AttachmentFileContents'),'

--d2f98be7a8485c3315a1ad3624ff2a09--

')

Note: the positioning of the carriage return/linefeeds in this is critical. You can’t just concat them by using ‘\r’ or ‘\n’. I’ve found that doesn’t work. The line breaks need to be in the dynamic values.

Also note: you’ll see the boundary value in here, but the first time I used it, I added a double-dash prefix, and at the end, I used a double-dash prefix and suffix.

Now we have the pieces ready for the Web Activity.

Note on Line Endings

I found this works for most web servers, but some web servers are fussy about how new lines are formed. Some are happy to just get a line feed (LF) character, others want a carriage return and line feed (CRLF).

If you only supply a LF and it wants a CRLF, the error returned is normally one that says you’ve reached the end of the stream unexpectedly (or similar words). In that case, you’ll need to adjust the body to have consistent line endings with CRLF.

The replace() function in ADF works fine, but you can’t just specify ‘\n’ or ‘\r’, etc. I’ve found you need to use the base64 encodings to get what’s needed. (Suggestion from fellow MVP Simon Sabin)

They are as follows:

- Instead of ‘\r’ write base64ToString(‘DQ==’)

- Instead of ‘\n’ write base64ToString(‘Cg==’)

- Instead of ‘\r\n’ write base64ToString(‘DQo=’)

Send the POST call using a Web Activity

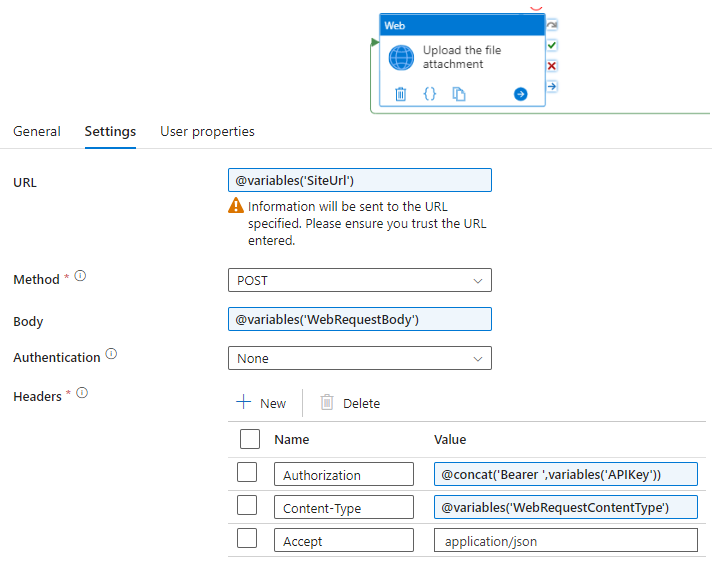

I then added a Web Activity called Upload the file attachment:

Note the Authorization header contained:

@concat('Bearer ',pipeline().parameters.APIKey)

That supplies the API key as the bearer.

Note also: you shouldn’t need the Accept header but I’ve found some services complain if it isn’t there. The other reason for having it set to application/json is so that when an error occurs, you can read it without having to decode it as you would with gzip compression, etc.

Uploading Larger Files

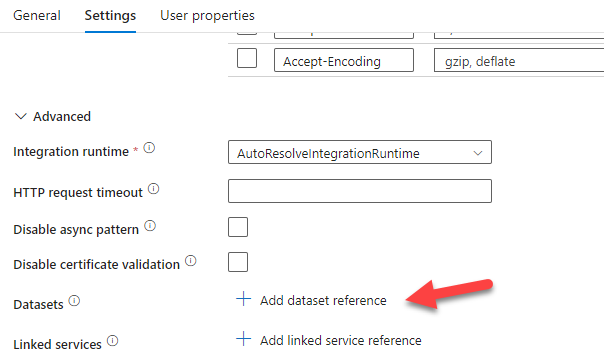

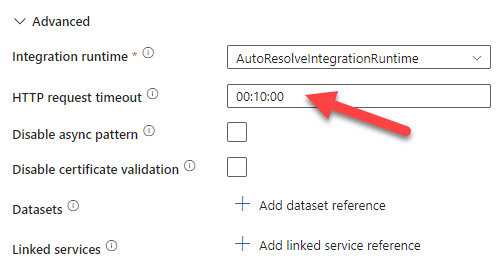

If you are uploading larger files, there are two things to consider:

- I’m not sure what the current maximum size is for an ADF variable, but the method that I’ve used assumes that the whole file will fit in a variable. It’s pretty large though.

- In the advanced settings for the Web Activity, there is a setting for an HTTP Request Timeout. That defaults to 1 minute. If the upload will take longer than that, you’ll need to increase it. It has a 10 minute maximum value.

Test the REST-based call

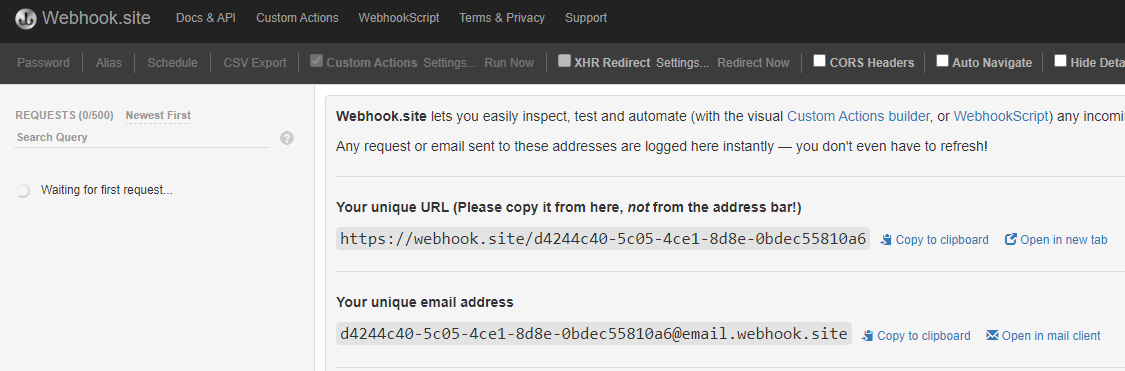

Now we get to test it. What we need is something to catch the requests while we’re testing. The easiest site I’ve found for this is webhook.site. You can set up a site that listens on a URL for free. It’s awesome. When you start it, it gives you a temporary URL to use:

That’s the URL that I used earlier as a pipeline parameter. Let’s test the POST by debugging the pipeline.

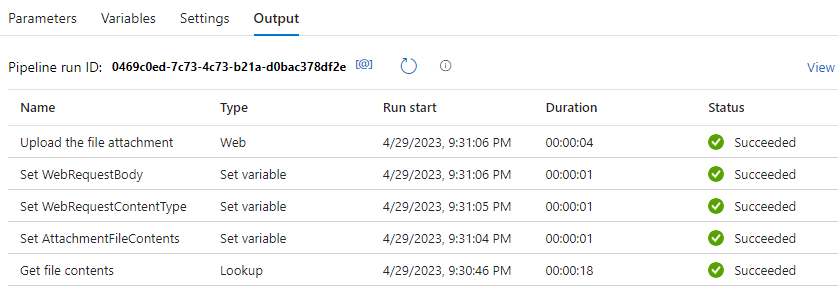

First, we make sure the pipeline ran as expected:

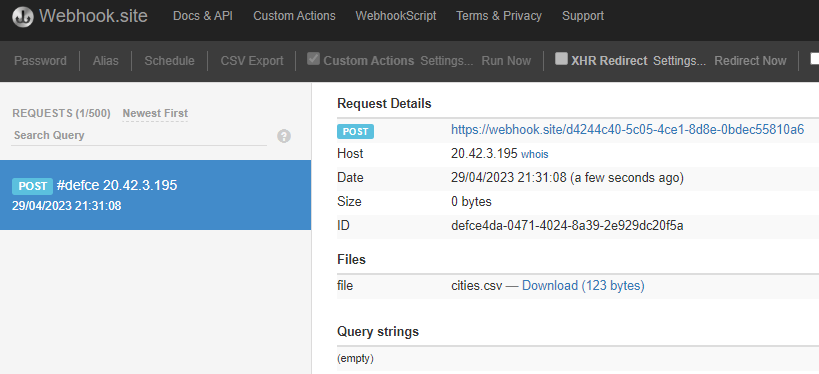

When we check the website, we see the request received, and with the file attachment!

Lower-level debugging

While this site is great when debugging most things, at first I struggled with it. The problem was that when I had malformed requests, I wished that it would just show me the raw data that it was receiving. I needed to compare my requests to other well-formed requests. I asked the authors and it can’t do that. (At least not right now)

What did help, is that I found a GitHub project from Janne Mattila, called Echo. You’ll find it here: https://github.com/JanneMattila/Echo . It looks like Janne also has a hosted version of it available here.

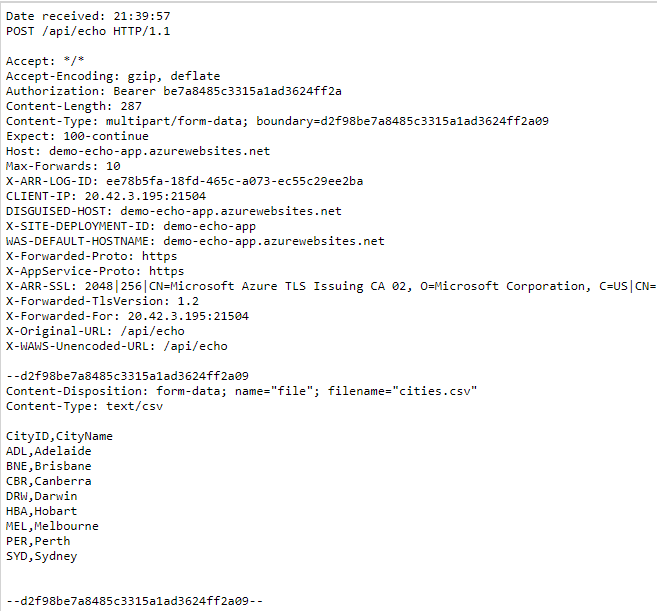

I decided to build that project and deploy it to an Azure Website, in case I wanted to modify it. And it was so very helpful. It just shows you the detail of everything it receives, rather than formatting the request:

Thanks Janne !

I know this has been a longer blog post than normal but I hope it helps someone work this out.

2023-04-29