SQL: Are big SQL Server databases really slower?

One question that I’m asked all the time when consulting is whether reducing the size of database tables will make queries run faster or not.

The underlying question is typically about whether the client should implement some sort of archiving strategy, to reduce the amount of data in a table by moving older data off into another table.

My answer is that it might help, but if it does, you probably have another issue that would be a better one to solve instead.

When you need to read some data from a table, and you know where the data is, it doesn’t matter how much other data is in the table. The only time that the amount of data matters is if you don’t know where the data you are looking for is located.

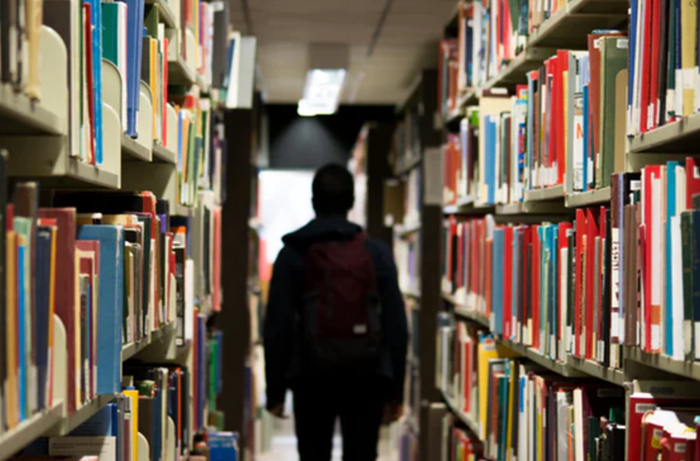

Imagine you have walked into a physical library with a very large number of books. If you look in an index of authors to find the book by your favorite author that you want to read, and it tells you exactly where it is in the library, does it really make a difference if the library is expanded to double the number of books?

Image by J Zamora

Image by J Zamora

You might have to walk a little further, but the process is still much the same, and the time taken will be much the same. But if you didn’t have that index of authors, it makes a huge difference, because now you’d have to look through twice as many books to find the one that you need.

Having more data in a table than you need to access is only a problem if you are reading all that data.

The tell-tale signs of this sort of problem are obvious. If you have queries that get slower as the system holds more data, you probably have an issue to solve, and it’s likely an indexing problem.

2018-02-05