T-SQL 101: 45 Upper and lower case conversion of strings in SQL Server

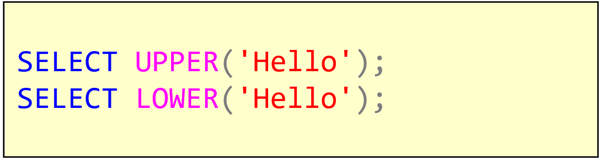

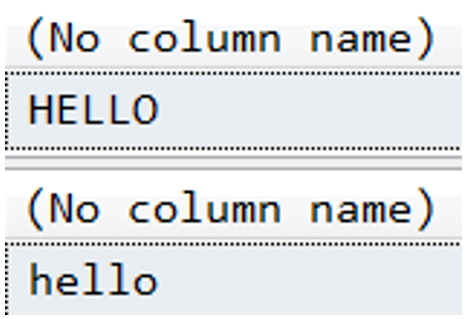

If you need to convert strings to all upper case or all lower case, you can use the UPPER and LOWER functions in T-SQL.

These are simple functions. It doesn’t matter what case the strings were in to start with; the functions just do the case conversion.
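As a minimal sketch of the two functions in use, the query below converts one mixed-case value both ways. The variable name `@Sample` and its contents are made up for illustration and are not from the original post.

```sql
-- Illustrative only: @Sample and its value are assumed sample data.
DECLARE @Sample nvarchar(50) = N'Hello There, SQL Server';

SELECT UPPER(@Sample) AS UpperCased,  -- HELLO THERE, SQL SERVER
       LOWER(@Sample) AS LowerCased;  -- hello there, sql server
```

Characters that have no case, such as the comma and the spaces, pass through unchanged.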

It’s also worth noting that SQL Server Management Studio has shortcuts for changing the case of your query text. To quickly convert text to all upper case or all lower case, highlight it and press Ctrl+Shift+U for upper or Ctrl+Shift+L for lower.

2019-11-25